The AI was caught creating problematic content like racially diverse Nazis

Summary

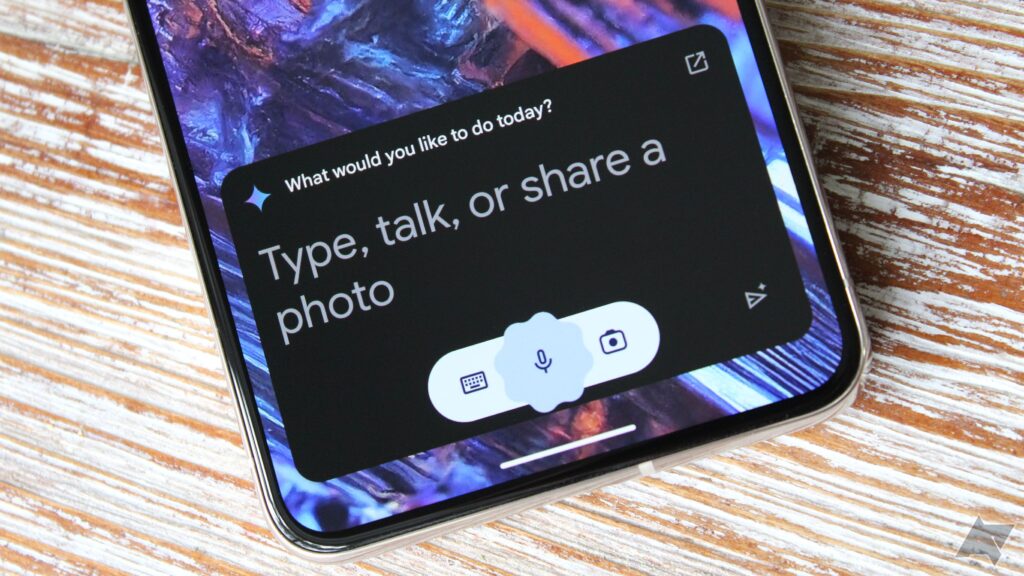

- Google is fixing issues with Gemini AI’s image generation, temporarily pausing the generation of images of people.

- Controversy arose over inaccurate historical images.

- The company aims to retain diversity in images while addressing historical inaccuracies, to avoid erasing marginalized groups.

Google’s multimodal generative Gemini AI is capable of generating images. After it was caught producing a multitude of inaccurate illustrations or photos of historic scenes, Google has now made the decision to pull the emergency brake and temporarily paused Gemini’s ability to make pictures of people entirely.

As Google announced on X, the company is working to resolve problems with the AI’s image generation and is preparing an improved version for release. It states, “While we do this, we’re going to pause the image generation of people and will re-release an improved version soon.”

The controversy around Gemini centers on inaccurate images, such as illustrations depicting racially diverse Nazi-era German soldiers and US senators from the 1800s. Without referencing particular images, the company said in an earlier statement that its image generation is designed to produce a wide range of people, and that this is typically a good thing: “Gemini’s AI image generation does generate a wide range of people. And that’s generally a good thing because people around the world use it. But it’s missing the mark here.”

Before the controversy around historically inaccurate Nazi soldiers or US senators, the dialogue around Gemini’s image bias was driven partly by right-wing figures. The discussion revolved around Gemini supposedly generating people who were too diverse for queries like “American woman” or “Swedish woman.” As The Verge notes, none of the depicted people are real in the first place, and neither America nor Sweden are exclusively inhabited by people of particular appearances, making the generated images as authentic and plausible as any other.

With its pause on image generation of people, Google appears intent on stopping historically inaccurate images rather than abandoning its diverse approach. After all, historically inaccurate images could end up achieving the exact opposite of what Google’s diverse approach is intended to do. They have the potential to erase historic injustice and underrepresentation, suggesting that marginalized groups weren’t actually marginalized.